Strong Support at Conference for ‘Killer Robot’ Regulation

June 2024

By Michael T. Klare

An international conference that drew more than 1,000 participants, including government officials from 144 nations, showed strong support for regulating autonomous weapons systems, but reached no conclusion on exactly how that should be done, organizers said.

There is a “strong convergence” that autonomous weapons systems “that cannot be used in accordance with international law or that are ethically unacceptable should be explicitly prohibited” and that all other autonomous weapons systems “should be appropriately regulated,” Austrian Foreign Minister Alexander Schallenberg, chair of the Vienna Conference on Autonomous Weapons Systems, said in his closing summary.

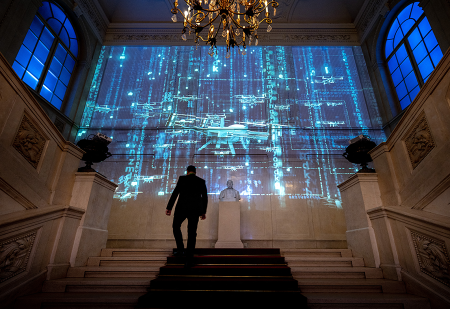

The April 29-30 conference, hosted by Austria, focused attention on the dangers posed by the unregulated deployment of these weapons systems, sometimes called “killer robots,” and on mobilizing support for negotiations leading to legally binding restrictions on such systems.

Schallenberg and other participants expressed concern that, without urgent action, these systems, which can orient themselves on the battlefield and employ lethal force with minimal human oversight, could soon be deployed worldwide, endangering the lives of noncombatants. The foreign minister spoke of a “ring of fire” from the Russian war on Ukraine to the Middle East to the Sahel region of Africa and warned that “technology is moving ahead with racing speed while politics is lagging behind.”

“This is the Oppenheimer moment of our generation,” Schallenberg said. “The preventative window for such action is closing.”

Over two days of plenaries and panels, speakers from academia, civil society, industry, and the diplomatic community addressed the dangers posed by autonomous weapons systems and various strategies for regulating them. Numerous experts warned that once untethered from human control, these systems could engage in unauthorized attacks on civilians or trigger unintended escalatory moves.

As reflected in public statements and panel discussions, there was near-universal agreement that, in the absence of persistent human control, the battlefield use of such systems is inconsistent with international law and human morality. But participants disagreed over the best way to regulate them.

The conference occurred at a watershed moment in the drive to impose international controls on autonomous weapons systems. For the past 10 years, a UN group of governmental experts has been meeting under the auspices of the Convention on Certain Conventional Weapons (CCW) to identify the dangers posed by these devices and assess various proposals for regulating them, including a protocol to the CCW banning them altogether.

During these discussions, many participants in the group of experts coalesced around the so-called two-tiered approach, which would ban any lethal autonomous weapons systems that cannot be operated under persistent human control and would impose strict regulations on all other such weapons. But because the CCW operates by consensus, progress toward implementation of these proposals has been blocked by several countries, including Russia and the United States, that oppose legally binding restrictions on these systems.

This impasse has sparked two alternative approaches to regulating these systems. Advocates of a legally binding instrument, having lost patience with the group of experts, have urged the UN General Assembly to consider such a measure.

In December, the UN General Assembly passed a resolution expressing concern over the dangers posed by these systems and calling on the secretary-general to conduct a study of the topic in preparation for a General Assembly debate scheduled for the fall. (See ACT, December 2023.)

The second approach, favored by the United States, the United Kingdom, and several of their allies, calls for the adoption of voluntary constraints on the use of autonomous weapons systems. As affirmed in a document titled “Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy,” released by the U.S. State Department in November, there are legitimate battlefield uses for such systems as long as they are used in accordance with international law and “within a responsible human chain of command and control.” (See ACT, April 2024.)

Based on statements by panelists and government officials, most participants at the Vienna conference appeared to favor the first approach: abandoning the CCW and pursuing a legally binding instrument, preferably through the General Assembly.

“The process at the CCW is a dead end because of the rule of consensus and the possibility of certain states, like Russia, blocking any meaningful measures on autonomous weapons,” Anthony Aguirre, executive director of the U.S.-based Future of Life Institute, said. “I think we need a new treaty, and that treaty needs to be negotiated in the UN General Assembly.”

Most of the states represented at the conference, including Austria, Belgium, Brazil, Chile, Egypt, Germany, Mexico, New Zealand, Pakistan, Peru, South Africa, and Switzerland, echoed Aguirre’s view, voicing support for negotiations leading to such a treaty. But other states, including Australia, Canada, Italy, Japan, Turkey, the UK, and the United States, indicated that the CCW remains the most appropriate forum for further discussion of autonomous weapons systems regulation.

With the conference behind them, Schallenberg and his allies are looking to preserve the convergence of views achieved in Vienna and ensure that it is brought to bear at the General Assembly in September.