On Integrating Artificial Intelligence With Nuclear Control

September 2022

By Peter Rautenbach

Although automation and basic artificial intelligence (AI) have been a part of nuclear weapons systems for decades,1 the integration of modern AI machine learning programs with nuclear command, control, and communications (NC3) systems could mitigate human error or bias in nuclear decision-making. In doing so, AI could help prevent egregious errors from being made in crisis scenarios when nuclear risk is greatest. Even so, AI comes with its own set of unique limitations; if unaddressed, they could raise the chance of nuclear use in the most dangerous manner possible: subtly and without warning.

With Russia’s recent invasion of Ukraine and the resultant new low in Russian-U.S. relations, the threat of nuclear war is again at the forefront of international discussion. To help prevent this conflict from devolving into a devastating use of nuclear weapons, AI systems could be used to assess enormous amounts of intelligence data far faster than a human analyst and without many of the less favorable aspects of human decision-making, such as bias, anger, fear, and prejudice. In doing so, these systems could provide decision-makers with the best possible information for use in a crisis.

China, Russia, and the United States are increasingly accepting the benefits of this kind of AI integration and feeling competitive pressures to operationalize it in their respective military systems.2 Although public or open-source statements on how this would occur within their nuclear programs are sparse, the classic example of the Perimeter, or “Dead Hand,” system, established in the Soviet Union during the Cold War and still in use in Russia, points to high levels of automation within nuclear systems.

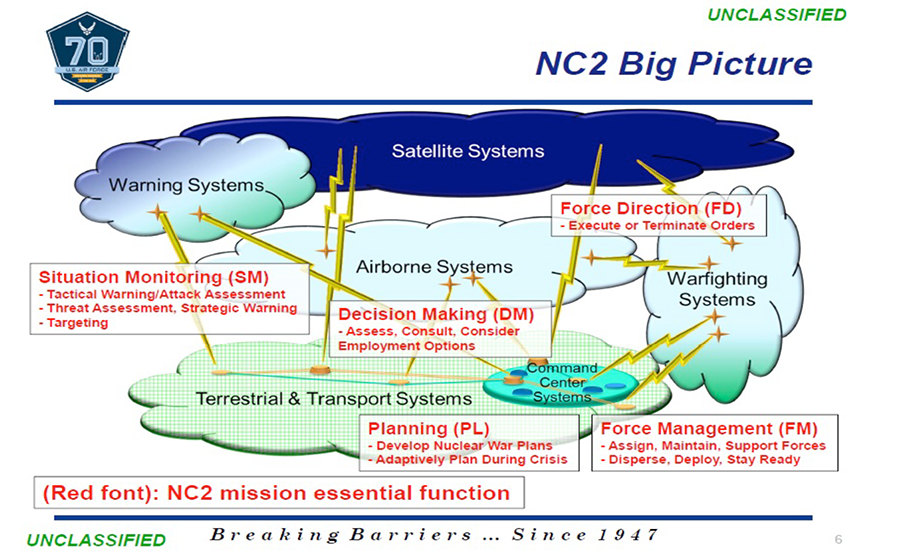

In the United States, there is a desire to modernize the aging NC3 system.3 As the 2018 U.S. Nuclear Posture Review stated, “The United States will continue to adapt new technologies for information display and data analysis to improve support for Presidential decision making and senior leadership consultations.”4 There is no direct mention of AI, but this statement is in line with how AI integration with NC3 systems could take shape. Given competitive drivers, the desire for modernization, and the general growth of AI technology, exploring the ramifications of integrating AI with nuclear systems is growing in importance.

Understanding Artificial Intelligence

The term “AI” evokes a myriad of definitions, from killer robots to programs that classify images of dogs. What binds these computational processes under the umbrella of artificial intelligence is their ability to solve problems or perform functions that traditionally required human levels of cognition.5 Machine learning is an important subfield of this research because these systems learn “by finding statistical relationships in past data.”6 To do so, they are trained using large data sets of real-world information. For example, image classifiers are shown millions of images of a specific type and form.7 From there, they can look at new images and use what they learned in training to determine what they are seeing. This makes them extremely useful for recognizing patterns and for managing and assessing data for “systems that the armed forces use for intelligence, strategic stability and nuclear risk.”8
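To make the idea of learning “statistical relationships in past data” more concrete, here is a minimal sketch that does not describe any fielded military system. It assumes a hypothetical two-number summary of each image and uses a nearest-centroid classifier, one of the simplest learning methods, in place of the deep neural networks that real image classifiers use. The features, the data, and the functions train_centroids and classify are all illustrative.

```python
import math
from statistics import fmean


def train_centroids(examples):
    """'Training': average the feature vectors seen for each label.
    These averages are the statistical relationships this toy model learns."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }


def classify(centroids, features):
    """Label a new input with the nearest learned centroid (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical two-feature image summaries: (ear pointiness, snout length), 0 to 1.
training_data = [
    ((0.9, 0.3), "cat"), ((0.8, 0.2), "cat"), ((0.85, 0.25), "cat"),
    ((0.3, 0.9), "dog"), ((0.2, 0.8), "dog"), ((0.25, 0.85), "dog"),
]
centroids = train_centroids(training_data)

print(classify(centroids, (0.7, 0.35)))  # cat
print(classify(centroids, (0.3, 0.75)))  # dog
```

In this toy, training amounts to nothing more than averaging past examples. The model has no notion of what a cat or a dog is beyond those averages, which is why the quality and coverage of the training data matter so much.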

This ability to find correlations by continuously sorting through large amounts of data with an unbiased eye is of particular relevance to early-warning systems and to pre-launch detection activities within the nuclear security field. For instance, machine learning systems could produce significant benefits by ensuring accurate, timely information for decision-makers and by recognizing potential attacks. These systems could help assess the increasingly sophisticated data being relayed to the North American Aerospace Defense Command (NORAD) in Colorado9 as part of the early-warning systems used to detect incoming nuclear weapons.

At the same time, unique technical flaws in AI technology stand to create new problems surrounding warning systems or rekindle old ones. The issue of false positives, or warning of an attack when none is under way, has plagued nuclear security for decades, and there are multiple examples of errors that nearly led to the use of a nuclear weapon.10

Current technical limitations in machine learning systems could compound this problem by causing an AI system to act in an unexpected manner and by providing faulty but seemingly accurate information to decision-makers who are expecting these computational programs to work without serious error in safety-critical environments. This is of particular importance when states, including Russia and the United States, depend on a “launch on warning” strategy,11 which requires that some nuclear weapons always be ready to be launched quickly in response to a perceived incoming nuclear attack.12

Although always dangerous, crisis scenarios such as the war in Ukraine can exacerbate these problems by creating an error-prone environment in which tensions are high and communications between Moscow and Washington effectively break down. By providing faster and potentially more comprehensive information in a manner that attempts to remove human fallibilities such as anger, fear, and adrenaline, AI programs could help safely navigate such crisis scenarios. Even so, the inherent difficulty that AI systems have when interacting with the complex nuclear decision-making process could result in a conundrum in which the tools meant to increase safety actually increase the danger.

Technical Flaws in the Nuclear Context

To understand the technical limitations of machine learning technology, it is important to recognize that this is not a situation in which the machine breaks or fails to work. Rather, the machine does exactly as instructed, but not what its programmers want. This “alignment problem,” as Brian Christian wrote in his book of the same name, sees those who employ AI systems as being in “the position of the ‘sorcerer’s apprentice’: we conjure a force, autonomous but totally compliant, give it a set of instructions, then scramble like mad to stop it once we realize our instructions are imprecise or incomplete.”13

In the context of nuclear decision-making, an unaligned AI system could have disastrous consequences. These technical hurdles are why integration with NC3 systems could be dangerous. In complex operating environments, machine learning systems often confront their own brittleness: the tendency of powerful programs to be brought low by slight tweaks or deviations in their input data that they have not been trained to handle.14 This becomes an issue when attempting to employ AI in an ever-changing real-world environment. In one case, graduate students in California found that an AI system they had trained to consistently beat Atari video games, despite a high success rate in the lab, fell apart when one or two random pixels were added to the screen.15 In another case, simply rotating objects in an image was sufficient to throw off some of the best AI image classifiers.16 An AI system could be told to provide accurate information in a nuclear security context, but due to its brittleness, it could either fail at this task or, more dangerously, provide inaccurate information under the guise of accuracy.
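Brittleness of this kind can be illustrated, in a very simplified way, with a toy linear detector. This is a hypothetical sketch: the 3x3 “image,” the weights, the bias, and the detect function are invented, and the key assumption is that training left the model leaning heavily on a single pixel. Real systems are vastly larger and fail in more varied ways, but the toy shows a comparable outcome: an output that flips because of a change a human observer would ignore.

```python
# Toy linear "threat" detector over a flattened 3x3 image (hypothetical values).
# Assumption: training left the model relying heavily on the center pixel (index 4).
WEIGHTS = [0.1, 0.1, 0.1, 0.1, 2.0, 0.1, 0.1, 0.1, 0.1]
BIAS = -1.0


def detect(pixels, weights=WEIGHTS, bias=BIAS):
    """Return True ("threat") if the weighted pixel sum plus the bias is positive."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return score > 0


clean = [0, 0, 0, 0, 0, 0, 0, 0, 0]  # empty scene
perturbed = clean.copy()
perturbed[4] = 1                     # a single stray pixel

print(detect(clean))      # False
print(detect(perturbed))  # True: one pixel turns "no threat" into "threat"
print(sum(a != b for a, b in zip(clean, perturbed)))  # 1 pixel changed
```

The detector is doing exactly what its weights tell it to do; the problem is that those weights encode a dependence nobody intended, which is the alignment issue described above in miniature.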

Training data is another serious vulnerability because inadequate data sets make machine learning programs unreliable.17 One major contributing factor to this problem in nuclear security is the use of simulated training data. Although there are extensive records associated with the launch of older ballistic missiles, newer, less tested models may require the use of simulation.18 The lack of real-world data on offensive nuclear use is undoubtedly fortunate for the world, but it nevertheless means that much of the data used to train machine learning programs for NC3 systems will be artificially simulated. As a result, AI systems can be “deceptively capable, performing well (sometimes better than humans) in some laboratory settings, then failing dramatically under changing environmental conditions in the real world.”19
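The gap between simulated training data and real-world conditions can be sketched with a one-feature threshold model. Everything here is hypothetical: the sensor readings, their units, the functions fit_threshold and accuracy, and the assumption that real background readings run higher than the simulation predicted. The point is only that a model can score perfectly on the data it was built from and still fail once conditions shift.

```python
from statistics import fmean


def fit_threshold(negatives, positives):
    """Learn a decision threshold halfway between the two class means."""
    return (fmean(negatives) + fmean(positives)) / 2


def accuracy(threshold, negatives, positives):
    """Fraction of readings classified correctly (>= threshold means 'launch')."""
    correct = sum(x < threshold for x in negatives) + sum(x >= threshold for x in positives)
    return correct / (len(negatives) + len(positives))


# Hypothetical simulated sensor readings (arbitrary units) used for training.
sim_background = [1.0, 1.2, 0.9, 1.1]
sim_launch = [3.0, 3.2, 2.9, 3.1]
threshold = fit_threshold(sim_background, sim_launch)  # about 2.05

# Hypothetical real-world readings: background runs higher than the simulation assumed.
real_background = [2.1, 2.3, 2.2, 2.4]
real_launch = [3.0, 3.3, 3.1, 3.2]

print(accuracy(threshold, sim_background, sim_launch))    # 1.0 in the "lab"
print(accuracy(threshold, real_background, real_launch))  # 0.5 in the "field"
print(sum(x >= threshold for x in real_background))      # 4 false alarms
```

In this toy case every real background reading crosses the learned threshold, so a model that was flawless on simulated data produces nothing but false alarms in the shifted environment.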

Human Bias in Artificial Intelligence

Furthermore, during the training processes used to create machine learning systems, the biases of the humans doing the training can easily and unintentionally be passed on to the AI program. Even if a machine learning system can accurately read the data, any human biases it absorbed during training can lead it to over- or undervalue the information it assesses.

A prime example of human bias finding its way into an AI system occurred when Amazon used one of these systems to filter the résumés of potential job candidates, only to shut it down because the system was found to have a significant bias toward male applicants.20 The AI system was trained by observing patterns in résumés of previously successful candidates, most of which came from men.21 As a result, the AI learned to place a higher value on male candidates, which not only reflected the domination of men in the industry but also worked to perpetuate it.22
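The mechanism in the Amazon case can be reproduced in miniature. The sketch below is not Amazon's system; it is a crude, hypothetical word-ratio scorer (learn_word_weights and score are invented names) trained on an invented hiring history in which résumés containing the token “womens” were mostly rejected. Two candidates with identical qualifications then receive different scores solely because of that token.

```python
from collections import Counter


def learn_word_weights(history):
    """Weight each word by (times seen in hired résumés + 1) / (times seen in rejected + 1).
    Add-one smoothing avoids division by zero; the weights simply mirror past outcomes."""
    hired, rejected = Counter(), Counter()
    for words, was_hired in history:
        (hired if was_hired else rejected).update(set(words))
    vocabulary = set(hired) | set(rejected)
    return {w: (hired[w] + 1) / (rejected[w] + 1) for w in vocabulary}


def score(weights, words):
    """Multiply the weights of the words present; unseen words are neutral (1.0)."""
    result = 1.0
    for w in set(words):
        result *= weights.get(w, 1.0)
    return result


# Invented history: past hires rarely included the token "womens".
history = [
    (["engineering", "captain"], True),
    (["engineering", "captain"], True),
    (["engineering"], True),
    (["engineering", "captain", "womens"], False),
    (["engineering", "womens"], False),
]
weights = learn_word_weights(history)

candidate_a = ["engineering", "captain"]
candidate_b = ["engineering", "captain", "womens"]  # same skills, one extra token
print(score(weights, candidate_a) > score(weights, candidate_b))  # True
print(round(weights["womens"], 3))  # 0.333
```

Nothing in the code mentions gender or intent; the skew comes entirely from the historical outcomes the model was trained on.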

One can easily imagine similar problems occurring in AI systems trained to observe the actions of adversarial nations for the purpose of early warning or the pre-launch detection of nuclear weapons. Human bias could sneak into the system, and the data relating to certain actors or variables would cease to accurately reflect what is occurring in the real world. Routine activity could be given undue weight, resulting in an AI warning of a potential incoming nuclear attack. A U.S. president and military commanders would be expecting a balanced, fair calculus when in truth the machine is perpetuating the kind of bias that could lead to catastrophic miscalculation and increase the risk of responding to false positives.

A Policy Answer for a Technical Problem

Despite such concerns, it is likely that the integration of AI within the NC3 structure in the United States and other countries will continue. Crucially, states and militaries do not want to fall behind their competitors in the AI race.23 Furthermore, the potential benefits of this integration stand to stabilize many aspects of the nuclear command system.

Although technical solutions may be developed to lessen the impact of these limitations, the sheer complexity of AI and the pressure to integrate it with military systems mean that these problems must be addressed now. The heavy incentive for states to start incorporating the technology as soon as possible means they may have to deal with imperfect systems playing critical roles in nuclear command. In the end, however, the real problem is not AI per se but a rushed integration of AI with nuclear systems that fails to take into account the technical limitations of current AI technology and the complexity of nuclear security. Such an integration could increase the risk of nuclear use.

Approaching this challenge from a policy standpoint could mitigate the risks posed by this integration. Specifically, this would mean adopting a nuclear policy that expands the decision-making time for launching a nuclear weapon, such as by moving away from launch-on-warning strategies or by “de-alerting” silo-based intercontinental ballistic missiles. By increasing the amount of time needed to ready nuclear weapons for use, these policies could give leaders more opportunity to assess the nuclear security-related information provided by the AI. Even if policy is not changed, the simple understanding that these limitations exist could lead to higher levels of training and a healthy degree of skepticism among the people who operate and employ these AI systems.

AI has the potential to drastically improve safety and lower the chance of nuclear use if integrated with NC3 systems. It is essential that governments approach this integration with open eyes and acknowledge that the AI part of the loop is not always, or even fundamentally, better in its judgment than its human counterparts. This understanding will be key to harnessing AI successfully in pursuit of a safer future.

ENDNOTES

1. Page O. Stoutland, “Artificial Intelligence and the Modernization of US Nuclear Forces,” in The Impact of Artificial Intelligence on Strategic Stability and Nuclear Risk: Volume 1, Euro-Atlantic Perspectives, ed. Vincent Boulanin (Stockholm International Peace Research Institute (SIPRI), May 2019), pp. 64–65, https://www.sipri.org/sites/default/files/2019-05/sipri1905-ai-strategic-stability-nuclear-risk.pdf.

2. Jill Hruby and Nina Miller, “Assessing and Managing the Benefits and Risks of Artificial Intelligence in Nuclear-Weapon Systems,” Nuclear Threat Initiative, August 26, 2021, p. 3, https://www.nti.org/wp-content/uploads/2021/09/NTI_Paper_AI_r4.pdf.

4. U.S. Department of Defense, “Nuclear Posture Review Report,” April 2010, p. 58, https://dod.defense.gov/Portals/1/features/defenseReviews/NPR/2010_Nuclear_Posture_Review_Report.pdf.

5. Hruby and Miller, “Assessing and Managing the Benefits and Risks of Artificial Intelligence in Nuclear-Weapon Systems,” p. 5.

6. Vincent Boulanin et al., “Artificial Intelligence, Strategic Stability and Nuclear Risk,” SIPRI, June 2020, p. 9, https://www.sipri.org/sites/default/files/2020-06/artificial_intelligence_strategic_stability_and_nuclear_risk.pdf.

9. Hruby and Miller, “Assessing and Managing the Benefits and Risks of Artificial Intelligence in Nuclear-Weapon Systems,” p. 15.

10. Paul Scharre, Army of None (New York: W.W. Norton & Co., 2019), p. 174.

11. Steven Starr et al., “New Terminology to Help Prevent Accidental Nuclear War,” Bulletin of the Atomic Scientists, September 29, 2015, https://thebulletin.org/2015/09/new-terminology-to-help-prevent-accidental-nuclear-war/.

12. Scharre, Army of None, p. 175; Starr et al., “New Terminology to Help Prevent Accidental Nuclear War.”

13. Brian Christian, The Alignment Problem: Machine Learning and Human Values (New York: W.W. Norton, 2020), pp. 12–13.

14. Abhijit Mahabal, “Brittle AI: The Connection Between Eagerness and Rigidity,” Medium, September 21, 2019, https://towardsdatascience.com/brittle-ai-the-connection-between-eagerness-and-rigidity-23ea7c70cb9f.

15. Douglas Heaven, “Deep Trouble for Deep Learning,” Nature, October 1, 2019, p. 165.

17. Michael Horowitz and Paul Scharre, “AI and International Stability: Risks and Confidence-Building Measures,” Center for a New American Security, January 12, 2021, p. 7, https://s3.us-east-1.amazonaws.com/files.cnas.org/documents/AI-and-International-Stability-Risks-and-Confidence-Building-Measures.pdf.

18. Hruby and Miller, “Assessing and Managing the Benefits and Risks of Artificial Intelligence in Nuclear-Weapon Systems,” p. 7.

19. Horowitz and Scharre, “AI and International Stability,” p. 7.

20. Jeffrey Dastin, “Amazon Scraps Secret AI Recruiting Tool That Showed Bias Against Women,” Reuters, October 10, 2018.

23. Horowitz and Scharre, “AI and International Stability,” p. 5.